A. Amazon Bedrock

In response to the rapid rise of Generative AI and machine learning workloads, AWS introduced numerous enhancements to Amazon Bedrock at AWS re:Invent 2025. We will examine these announcements in detail and provide clarity on their key aspects.

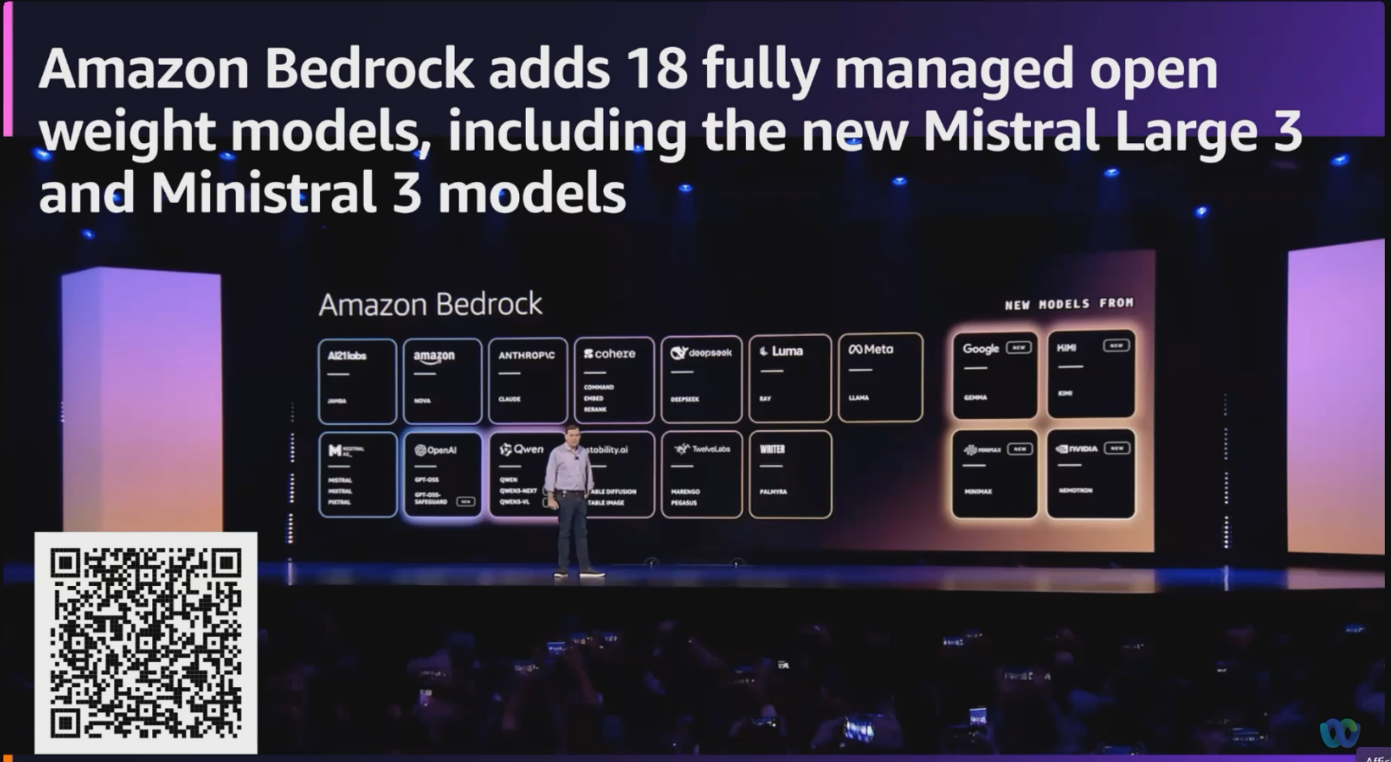

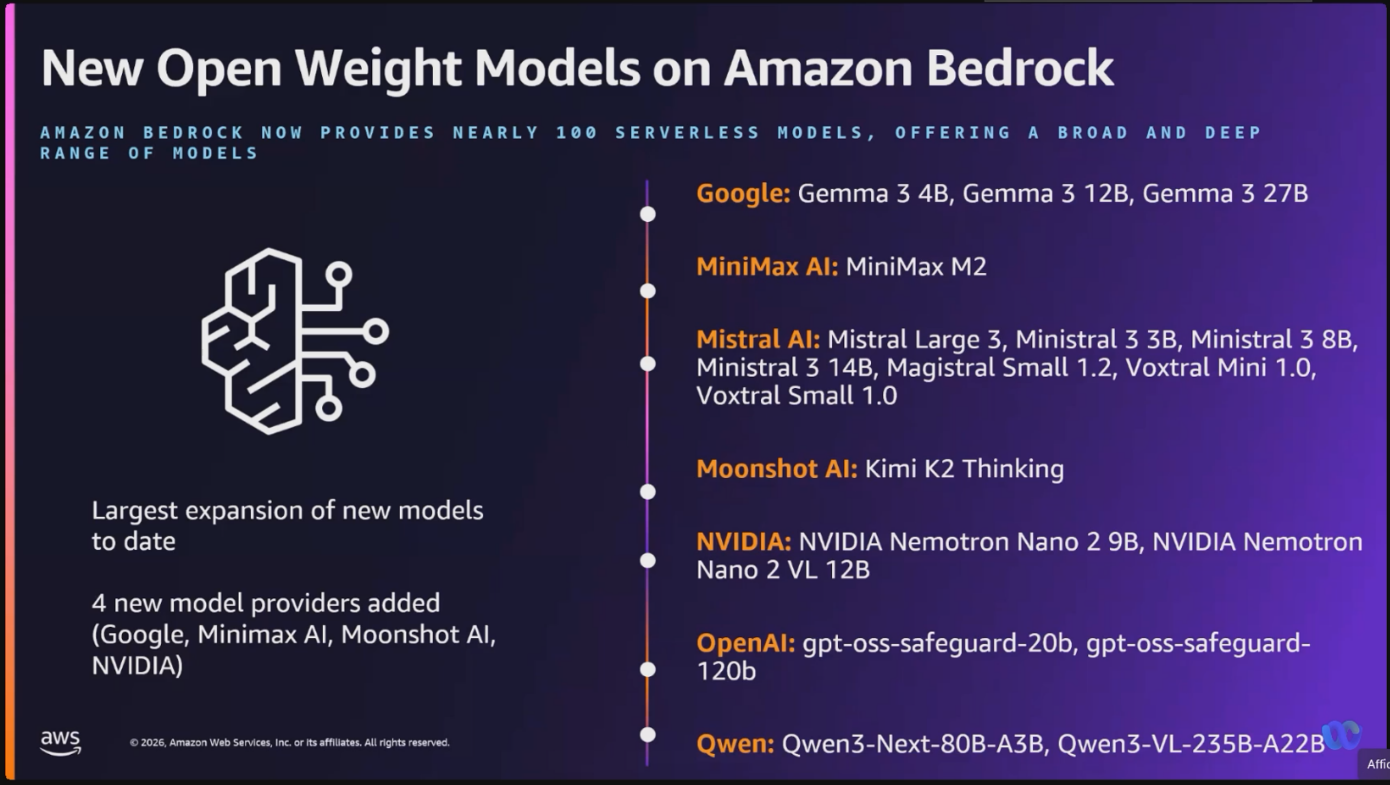

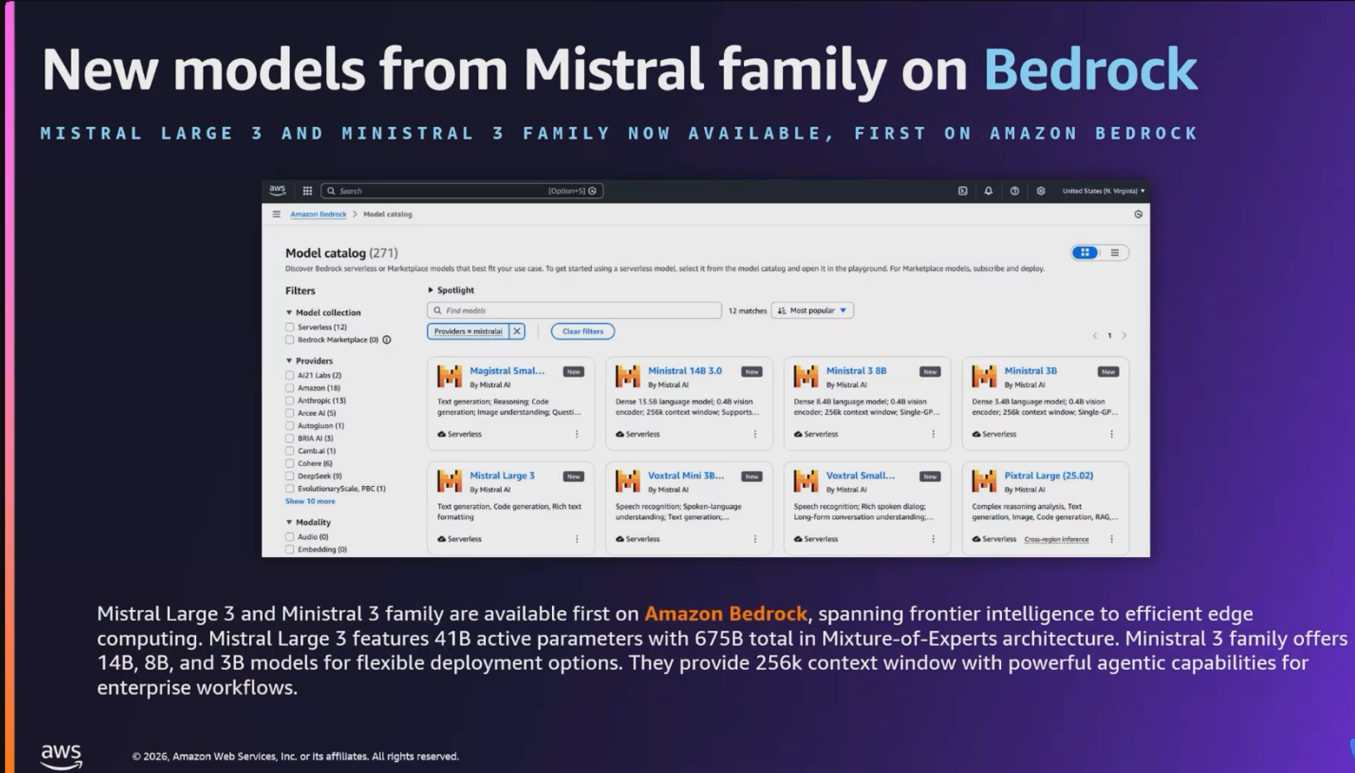

At AWS re:Invent 2025, Amazon announced the general availability of 18 additional fully managed open weight models in Amazon Bedrock from providers such as Google, MiniMax AI, Mistral AI, Moonshot AI, NVIDIA, OpenAI, and Qwen, including the new Mistral Large 3 and the Ministral 3 3B, 8B, and 14B models.

A.1 More open weight model options

You can use these open weight models for a wide range of use cases across industries:

| Model provider | Model name | Description | Use cases |
|---|---|---|---|
| Google | Gemma 3 4B | Efficient text and image model that runs locally on laptops. Multilingual support for on-device AI applications. | On-device AI for mobile and edge applications, privacy-sensitive local inference, multilingual chat assistants, image captioning and description, and lightweight content generation. |
| Google | Gemma 3 12B | Balanced text and image model for workstations. Multi-language understanding with local deployment for privacy-sensitive applications. | Workstation-based AI applications; local deployment for enterprises; multilingual document processing, image analysis and Q&A; and privacy-compliant AI assistants. |
| Google | Gemma 3 27B | Powerful text and image model for enterprise applications. Multi-language support with local deployment for privacy and control. | Enterprise local deployment, high-performance multimodal applications, advanced image understanding, multilingual customer service, and data-sensitive AI workflows. |
| Moonshot AI | Kimi K2 Thinking | Deep reasoning model that thinks while using tools. Handles research, coding, and complex workflows requiring hundreds of sequential actions. | Complex coding projects requiring planning, multistep workflows, data analysis and computation, and long-form content creation with research. |
| MiniMax AI | MiniMax M2 | Built for coding agents and automation. Excels at multi-file edits, terminal operations, and executing long tool-calling chains efficiently. | Coding agents and integrated development environment (IDE) integration, multi-file code editing, terminal automation and DevOps, long-chain tool orchestration, and agentic software development. |
| NVIDIA | NVIDIA Nemotron Nano 2 9B | High-efficiency LLM with hybrid transformer Mamba design, excelling in reasoning and agentic tasks. | Reasoning, tool calling, math, coding, and instruction following. |
| NVIDIA | NVIDIA Nemotron Nano 2 VL 12B | Advanced multimodal reasoning model for video understanding and document intelligence, powering Retrieval-Augmented Generation (RAG) and multimodal agentic applications. | Multi-image and video understanding, visual Q&A, and summarization. |

A.2 New Mistral AI models

These four Mistral AI models are now available first on Amazon Bedrock, each optimized for different performance and cost requirements.

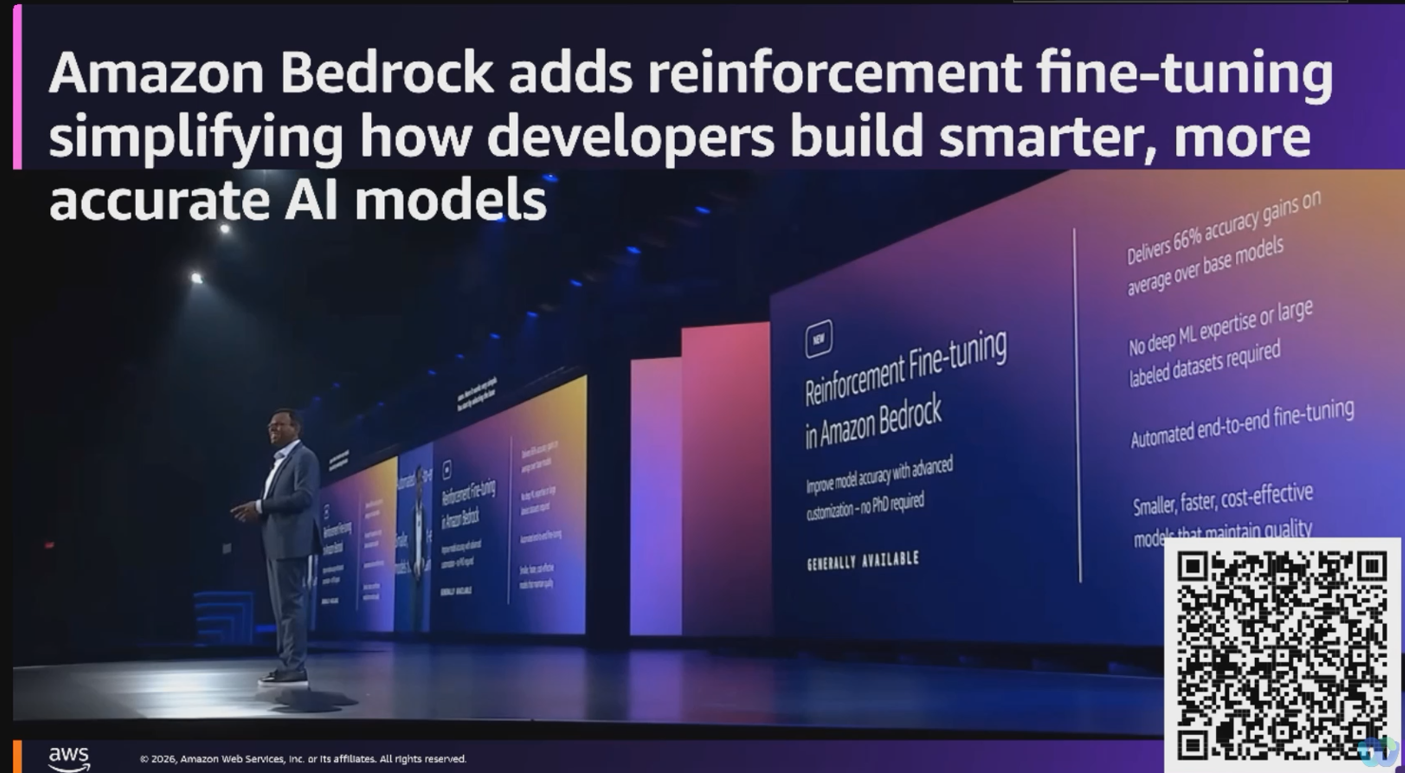

A.3 Reinforcement fine-tuning

Amazon Bedrock reinforcement fine-tuning enables you to customize foundation models using feedback on their responses, aligning them closely with your business objectives and brand voice. This reinforcement-learning-based approach goes beyond simple prompt engineering, allowing models to better understand your requirements and consistently generate more accurate and relevant outputs.

This guide is designed for ML engineers, data scientists, and cloud architects looking to enhance their AI applications through Amazon Bedrock model optimization. It explains how reinforcement learning–based fine-tuning works, why it delivers superior results compared to standard techniques, and how to apply it effectively in production environments.

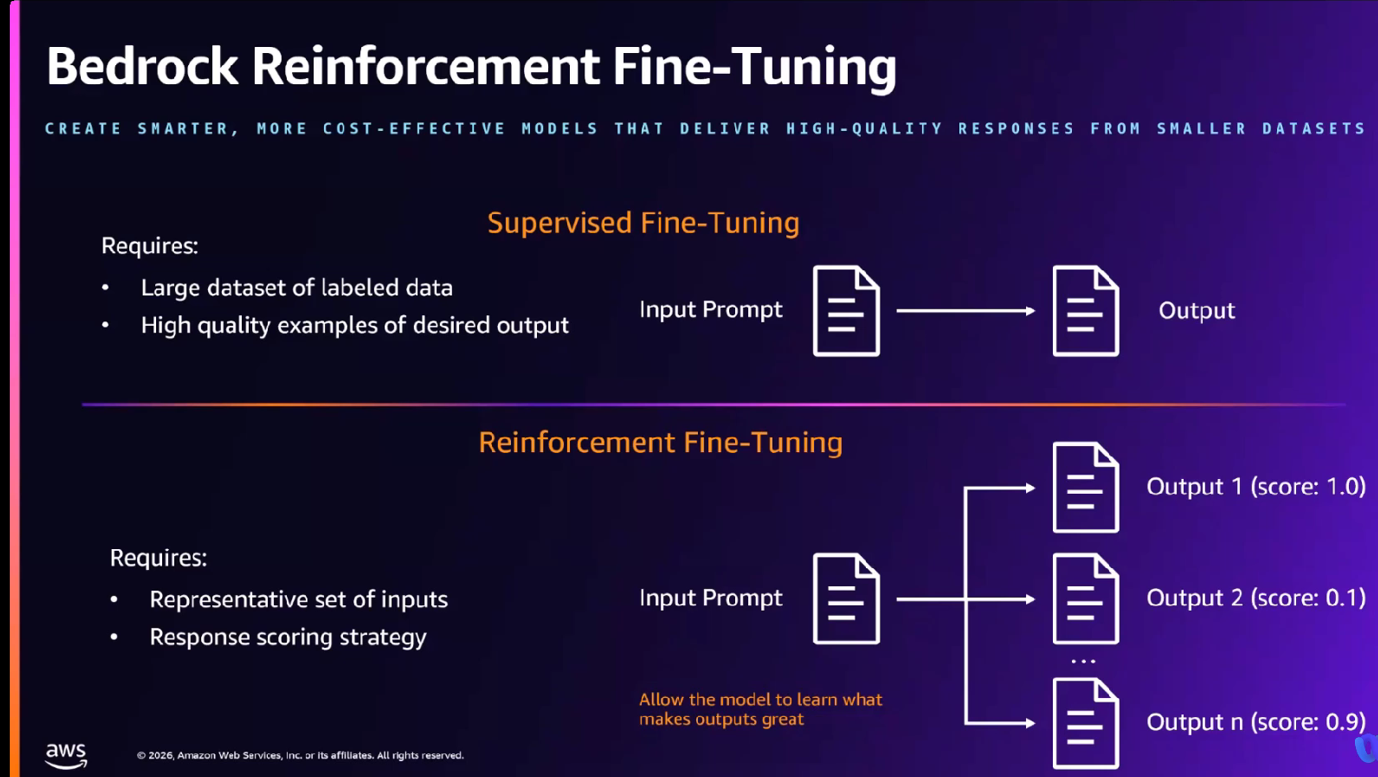

So what is reinforcement fine-tuning? It is a technique that lets you customize models with the least amount of data.

With supervised fine-tuning, you have to assemble a large dataset of labeled data that tells the model which responses are good. You would need thousands, maybe tens of thousands, of good responses to prepare the model to handle these kinds of answers.

With reinforcement fine-tuning, you do a little more work in data preparation, but you need far less data. You give the model a representative set of inputs and tell it what good looks like through a response scoring strategy. With that approach, you can customize the model and achieve greater accuracy with much less input data.
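To make the idea of a response scoring strategy concrete, here is a minimal sketch of a rule-based scoring function in Python. It is only an illustration: the names `Example`, `score_response`, and `average_reward` are hypothetical and are not part of any Amazon Bedrock API, and the word-overlap rule is a stand-in for whatever definition of "good" fits your task.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One training input plus a reference describing a good answer (hypothetical shape)."""
    prompt: str
    reference: str


def score_response(response: str, reference: str) -> float:
    """Return a reward in [0, 1].

    1.0 for a case-insensitive exact match; otherwise the fraction of
    reference words that also appear in the response.
    """
    resp = response.strip().lower()
    ref = reference.strip().lower()
    if resp == ref:
        return 1.0
    ref_words = set(ref.split())
    if not ref_words:
        return 0.0
    resp_words = set(resp.split())
    return len(ref_words & resp_words) / len(ref_words)


def average_reward(examples, responses):
    """Average the per-example rewards for a batch of model responses."""
    scores = [score_response(r, ex.reference) for ex, r in zip(examples, responses)]
    return sum(scores) / len(scores) if scores else 0.0
```

The point of the sketch is the shape of the work: a small representative set of inputs plus a function that grades responses, rather than thousands of hand-labeled answers.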

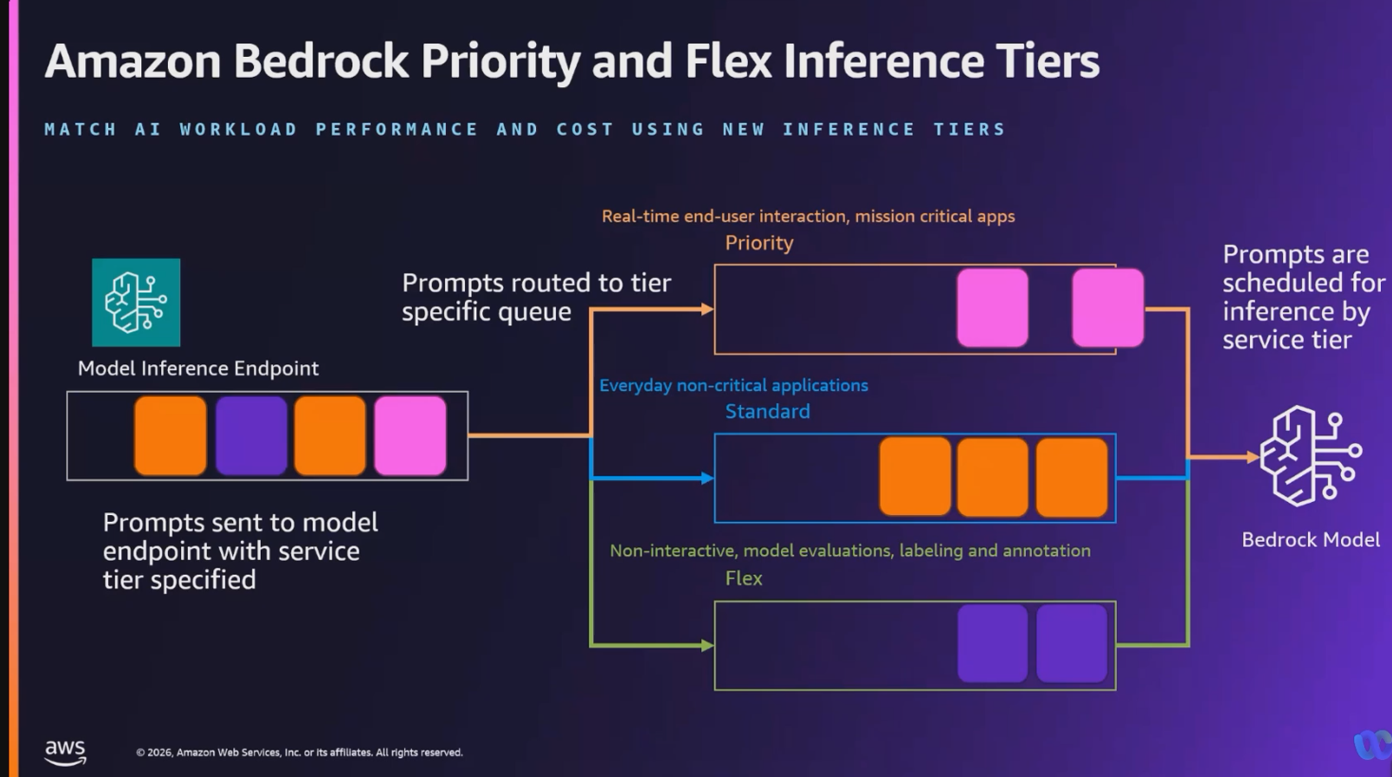

A.4 Amazon Bedrock Inference tiers

The new Flex tier offers cost-effective pricing for non-time-critical applications such as model evaluations and content summarization, while the Priority tier provides premium performance and preferential processing for mission-critical applications. For most models that support the Priority tier, customers can realize up to 25% better output tokens per second (OTPS) latency compared to the Standard tier. These join the existing Standard tier for everyday AI applications with reliable performance.
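One way to reason about the three tiers is as a simple routing decision per workload. The sketch below is an assumption-laden illustration: the `Tier` enum, its string values, and `choose_tier` are hypothetical helpers, not Amazon Bedrock API names, and real routing would also weigh cost and model support.

```python
from enum import Enum


class Tier(Enum):
    # Hypothetical labels for illustration only.
    FLEX = "flex"
    STANDARD = "standard"
    PRIORITY = "priority"


def choose_tier(mission_critical: bool, time_sensitive: bool) -> Tier:
    """Map a workload's requirements to an inference tier.

    - Mission-critical work gets preferential processing (Priority).
    - Everyday interactive work uses Standard.
    - Work that can wait, such as evaluations or batch summarization, uses Flex.
    """
    if mission_critical:
        return Tier.PRIORITY
    if time_sensitive:
        return Tier.STANDARD
    return Tier.FLEX
```

For example, a nightly model evaluation job would map to `Tier.FLEX`, while a customer-facing payment assistant would map to `Tier.PRIORITY`.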

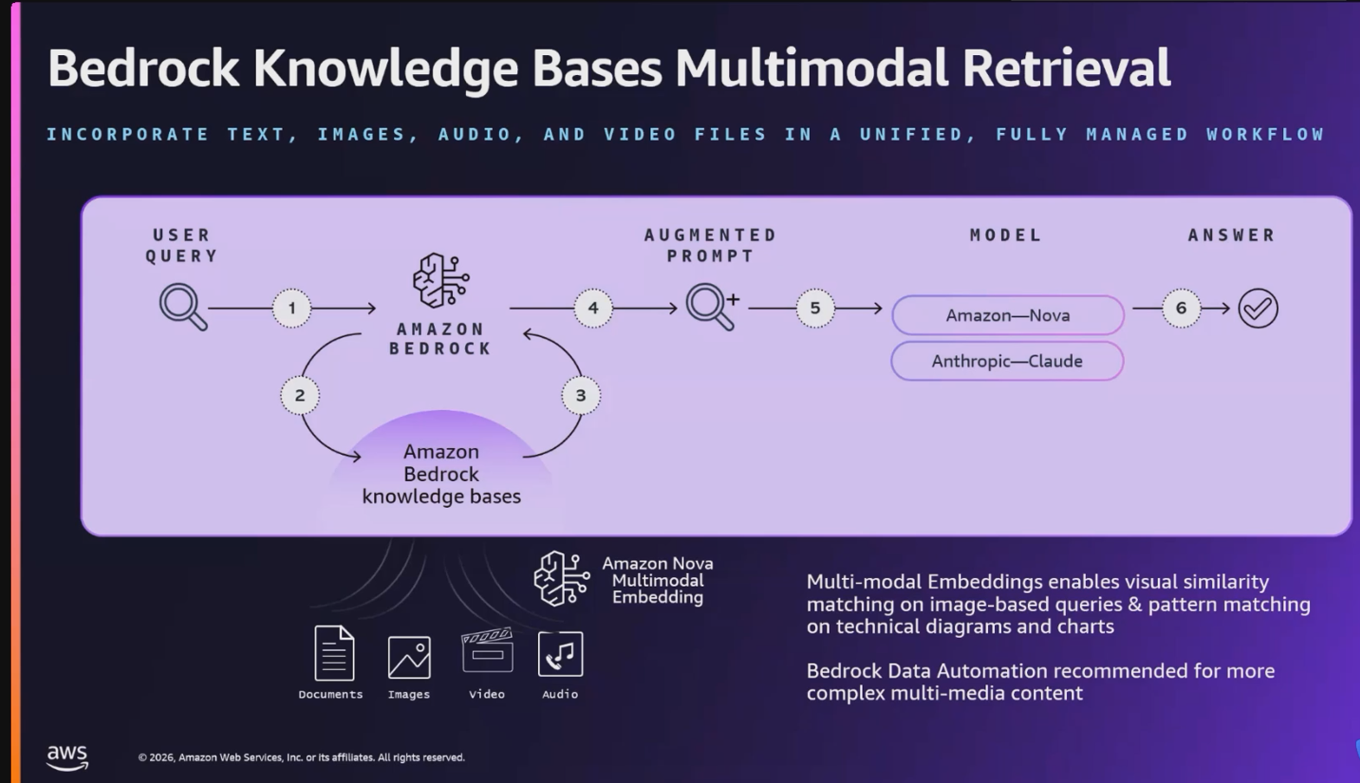

A.5 Amazon Bedrock Knowledge Bases multimodal retrieval

The knowledge base can now index and retrieve data not only by text search but also by image, video, and audio similarity, which simplifies how you store and search the data you use to augment your application.
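Similarity-based retrieval across modalities generally means that every item, whatever its type, is represented as a vector in a shared embedding space and ranked by closeness to the query vector. The following is a toy sketch of that idea, not the Knowledge Bases implementation: the three-dimensional vectors, the item IDs, and the `search` helper are all made up for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical index: (item id, modality, embedding). Real embeddings would
# come from a multimodal embedding model and have far more dimensions.
INDEX = [
    ("doc-1", "text", [0.9, 0.1, 0.0]),
    ("img-1", "image", [0.8, 0.2, 0.1]),
    ("vid-1", "video", [0.0, 0.1, 0.9]),
]


def search(query_vec, k=2):
    """Return the k most similar items as (id, modality), regardless of modality."""
    ranked = sorted(INDEX, key=lambda item: cosine(query_vec, item[2]), reverse=True)
    return [(item_id, modality) for item_id, modality, _ in ranked[:k]]
```

Because all items live in the same vector space, a single query can return a text document and an image side by side.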

A.6 Amazon Bedrock AgentCore

Agents are autonomous software systems that use AI to reason, plan, and complete tasks on behalf of humans or other systems.

The key value proposition of AgentCore is its support for any model and any agent framework. Whether you're using Anthropic, OpenAI, Amazon Nova, or other foundation models, AgentCore works seamlessly with them. It also integrates with popular agent frameworks such as LangChain, CrewAI, and Amazon's own Strands Agents. This broad compatibility makes AgentCore an ideal platform for building and scaling agent-based capabilities.

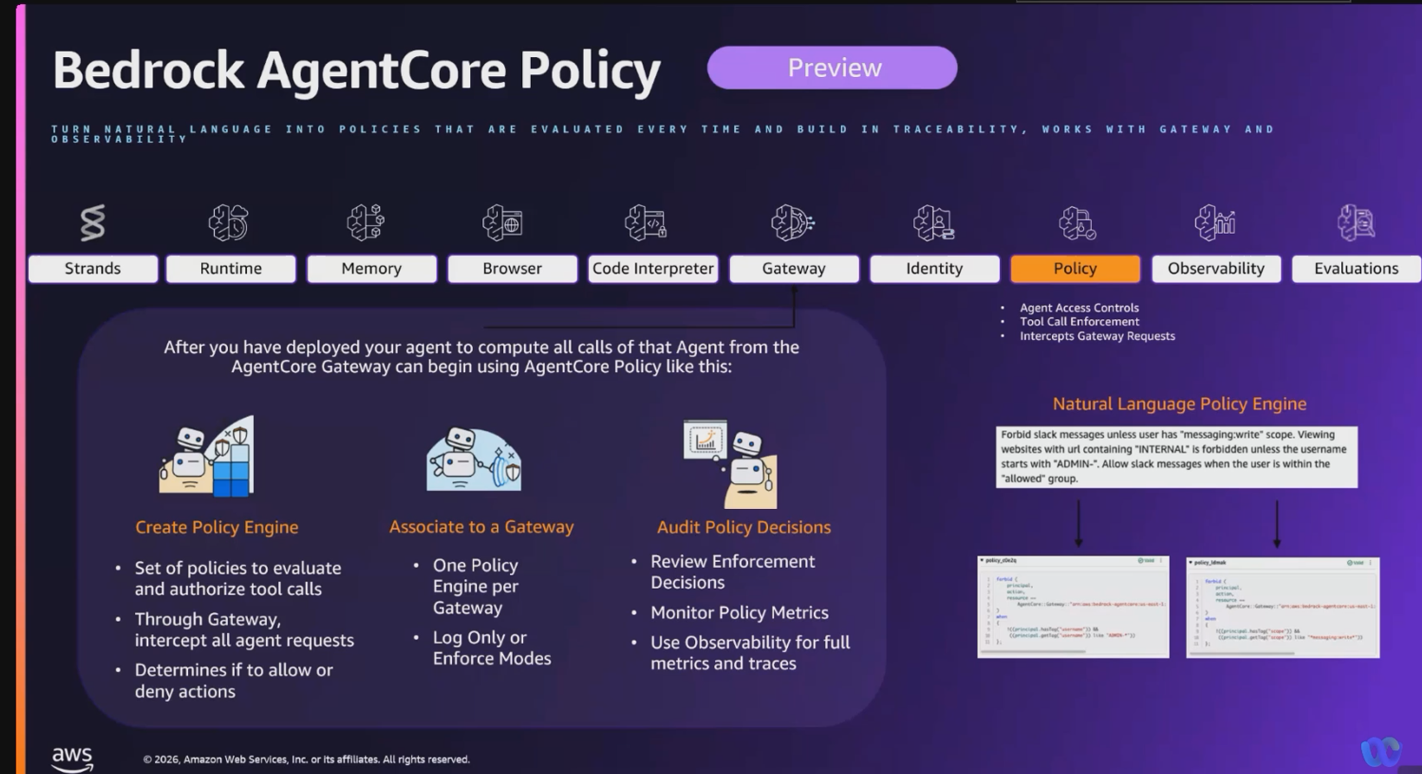

A.6.1 Amazon Bedrock AgentCore Policy

AgentCore Policy is a natural-language policy engine that automatically converts policies you write in natural language (plain English, French, and so on) into code.

For example, a financial services firm can state that an agent cannot access customer banking data outside business hours or from non-approved geographic regions. The system automatically converts this into enforceable policies.

How does it work?

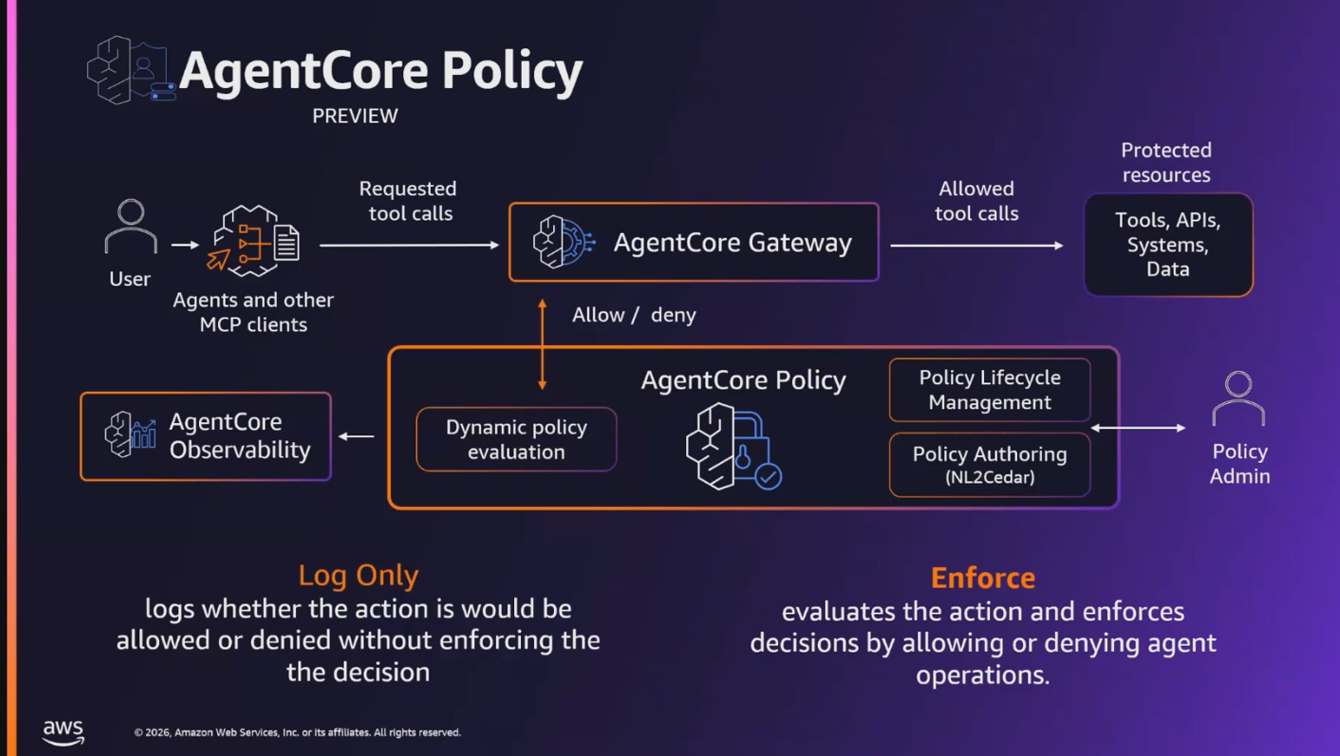

AgentCore Policy is tied to the gateway, which is the entry point to all the different APIs and resources. Every request flows through the gateway.

As shown above, the policy intercepts each request and evaluates it against the rules you have defined.

You can run it in two modes:

- Log-only mode, for auditing and understanding agent behavior patterns.
- Enforcement mode, to actively block unauthorized actions.

This gives you complete control over which tools agents can access, what data they can interact with, and what actions they are permitted to perform.
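The gateway check and its two modes can be sketched in a few lines of Python. This is a hand-written illustration of the behavior described above, not code generated by AgentCore Policy: the `Request` shape, the tool name `customer_banking_data`, the approved regions, and the business hours are all assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    LOG_ONLY = "log_only"   # record violations but let requests through
    ENFORCE = "enforce"     # record violations and block them


@dataclass
class Request:
    tool: str
    hour: int     # local hour of the request, 0-23
    region: str


# Hypothetical policy parameters for the financial-services example.
APPROVED_REGIONS = {"us-east-1", "eu-west-1"}
BUSINESS_HOURS = range(9, 17)  # 09:00 through 16:59


def violates_policy(req: Request) -> bool:
    """True if the request touches banking data outside hours or approved regions."""
    if req.tool != "customer_banking_data":
        return False
    return req.hour not in BUSINESS_HOURS or req.region not in APPROVED_REGIONS


def gateway_check(req: Request, mode: Mode, audit_log: list) -> bool:
    """Return True if the request may proceed; log every violation."""
    if not violates_policy(req):
        return True
    audit_log.append(f"violation: {req.tool} at {req.hour}:00 from {req.region}")
    return mode is Mode.LOG_ONLY
```

Running in log-only mode first lets you see which requests would have been blocked before switching the same rules to enforcement.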

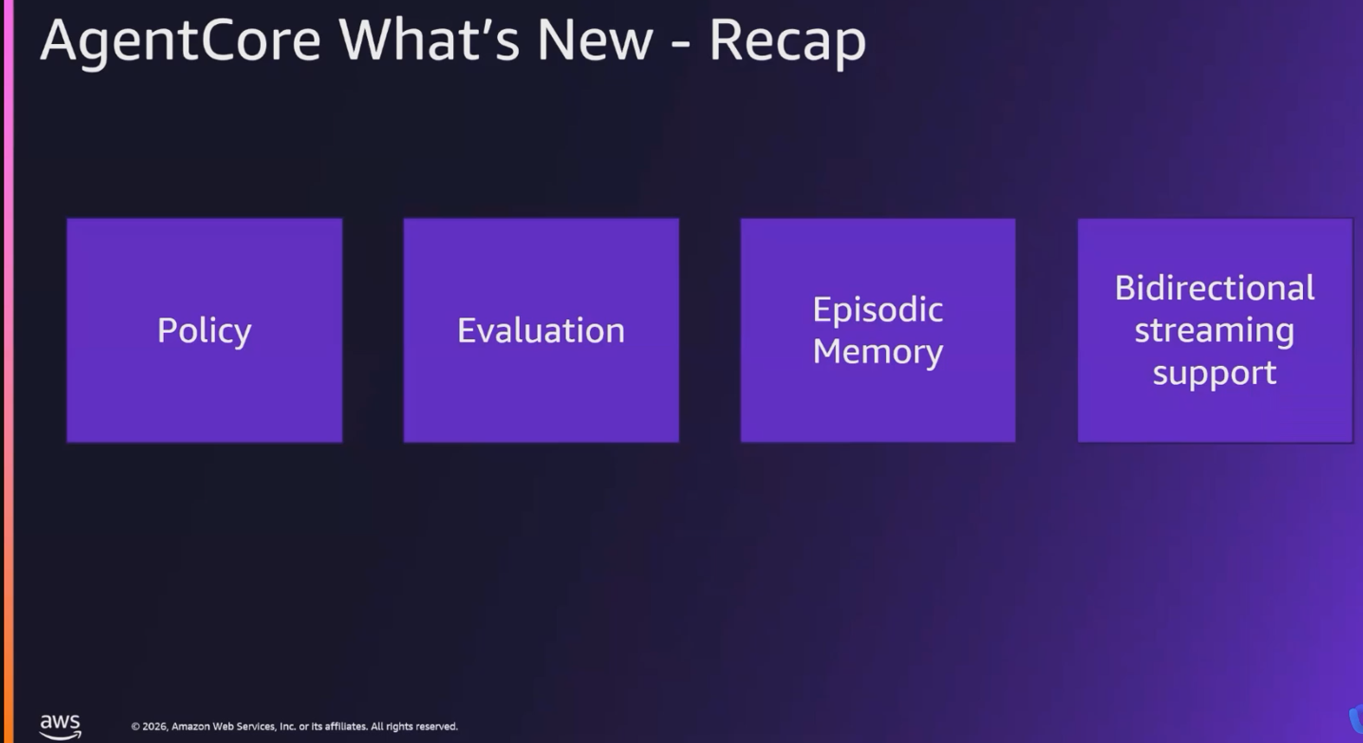

A.6.2 Amazon Bedrock AgentCore Evaluations

AgentCore Evaluations provides continuous, automated quality monitoring with built-in evaluators. There are about 13 built-in evaluators that cover the full spectrum of agent performance.

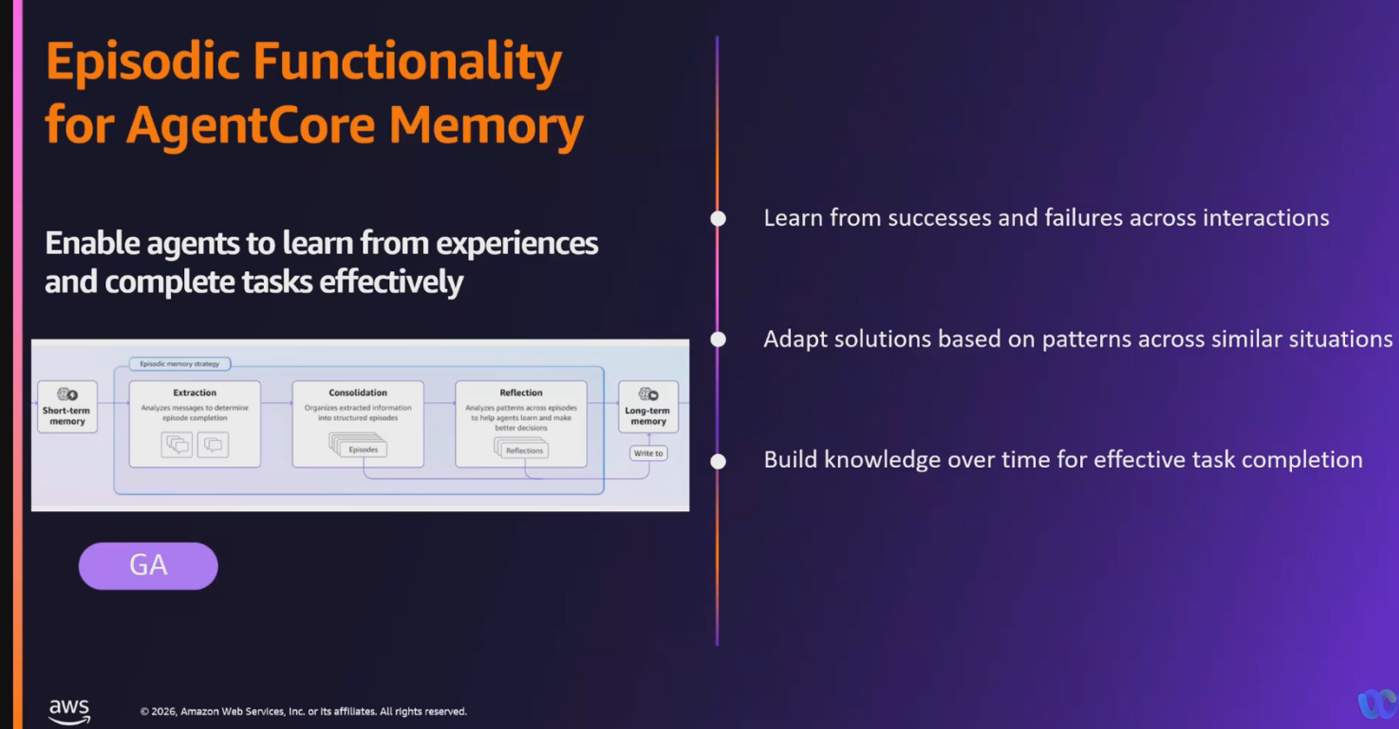

A.6.3 Episodic Functionality of AgentCore Memory

Episodic memory enables agents to recognize patterns and learn from experience.

Instead of storing every single raw event, it identifies important moments, summarizes them into compact records and organizes them as episodes.
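The filter, summarize, and organize steps can be sketched as follows. This is a deliberately naive illustration, not how AgentCore Memory is implemented: the `importance` score is assumed to be assigned upstream, and joining and truncating text stands in for a real summarization step that would typically use a model.

```python
from dataclasses import dataclass


@dataclass
class Event:
    text: str
    importance: float  # 0..1, assumed to be scored by an earlier step


@dataclass
class Episode:
    summary: str
    event_count: int  # number of important events folded into the summary


def build_episode(events, threshold=0.5, max_chars=80):
    """Keep only important events and compress them into one compact record."""
    important = [e for e in events if e.importance >= threshold]
    summary = "; ".join(e.text for e in important)
    if len(summary) > max_chars:
        # Naive truncation as a placeholder for real summarization.
        summary = summary[: max_chars - 3] + "..."
    return Episode(summary=summary, event_count=len(important))
```

The agent then stores the `Episode` rather than every raw `Event`, which keeps memory compact while preserving the moments that matter.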

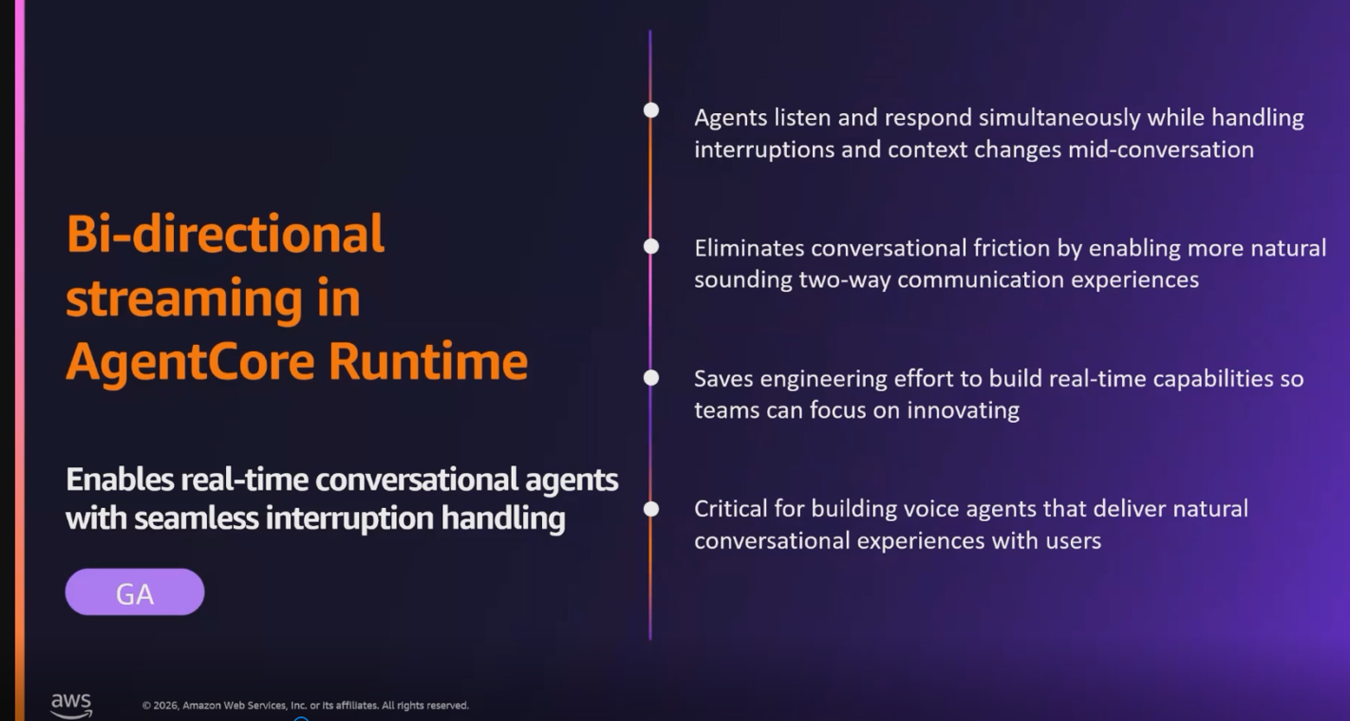

A.6.4 Bi-directional streaming in AgentCore Runtime

Bi-directional streaming enables real-time conversational agents with seamless interruption handling.
